GALLERY OF UNSEEN

TLDR: It started as a bunch of strange experiments and ideas on unsupervised generation and converged into another NaNoGenMo project. This post contains the nuances and technical details of this work.

Stage 1: Evolutionary search for aesthetics

First, I was playing with the evolutionary search of the optimal prompt for the SDXL model to maximize the aesthetic scores of results measured by Google’s pretrained NIMA model. As a starting set of prompt parts, I used something I did before in my Freaking Architecture project, so initially, images were biased toward architecture and sculpture. The evolutionary search is not the fastest thing I know, so I wanted to speed up the process and used LCMScheduler with lcm-lora-sdxl to lower the number of inference steps down to 4.

The genetic part was pretty straightforward: As a genome of an individual, I took a float vector of length 100, with probabilities to select one or another piece of prompt; the pieces were like these:

"fluid and dynamic forms",

"golden silver elements",

"googie motifs",

"gray stone",

"hexagonal pattern",

"houses and roads",

"in a ravaged library",

"in a square",

"limestone",

...To evaluate individuals, I randomly sampled up to 15 pieces of the prompt with the corresponding probabilities, merged them together, sampled an image from SDXL, and scored it with NIMA. Since both prompt generation and image generation are stochastic, I repeated both steps up to 10 times and averaged scores. The size of a generation pool was 20; I used generic random mutations and standard cross-over.

The both average and best scores slowly crawled up with the time:

Manual debug showed no signs of degeneration as well:

So I ran it in my colab pro account and left it running overnight. In the morning I got 50K+ of completely insane images; it was impossible to even check them all out.

I decided to focus on those with aesthetic scores greater than 6.0 (a pretty hard baseline), but still, there were 22K+ of them. I had to invent some way to find the most interesting images automatically.

Stage 2: Visual style clustering

To continue experiments, I’ve decided to try marimo— it’s some fresh jupyter analog, and I wanted to give it a try (overall: so far, it looks interesting but a bit unpolished; I should try it again in a half of a year maybe).

Digging through the pile of images, I’ve noticed there are several distinctly different visual styles standing out — like photos, sketches, paintings, and so on. So, I decided to group pictures by visual style somehow, for starters.

The general plan was to embed images into vectors, then lower the dimensionality of latent space, then cluster these low-dimensional vectors, and, finally, explore the clusters.

I tried the DINO (v1) model embeddings first, but the resulting clusters were visually too internally diverse, so I didn’t see any clear corresponding style:

After a short research, I switched to the generic VGG16 model and took only the 16th layer weights as a style embedding. That worked much better. After embedding, I made a UMAP projection of embeddings into 2d space and ran DBSCAN over it.

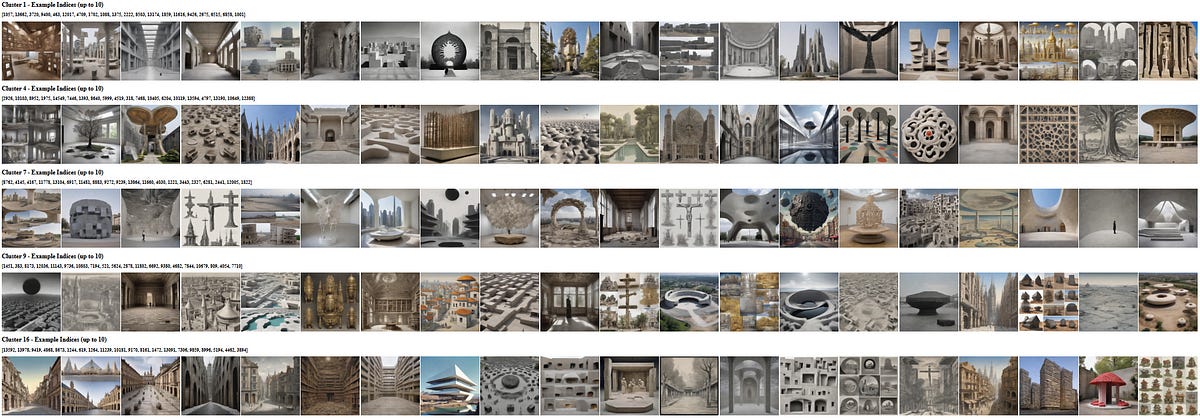

The top 3 style-based clusters had more than 1k images each, and the top 10 had more than 150 images. Visually, these clusters were pretty consistent:

For the rest of my experiments, I took the images from the top 3 clusters — one was all about some huge empty gray rooms; another had drawings, collages, and sketches; the third was something like church interiors and strange semi-organic rooms.

Overall, almost all of these images were pretty good. However, there were still too many very similar ones among them — multiple images of very similar objects; perhaps it was the result of multiple (10x) runs of aesthetic scoring for each individual, so each prompt was used to generate multiple images.

Anyway, I decided to make some deduplication.

Stage 3: Semantic deduplication

To do the deduplication, I embedded these selected images again, this time with the CLIP, since it should capture semantics better. Again, I applied UMAP + DBSCAN to get clusters across this subset of images.

Thus, I decided to take only one image from each semantic cluster, specifically the one with the maximum aesthetic score, and ended with approximately automatically selected 200 images — they had

high aesthetic scores (scored by model),

more or less the same style,

and were diverse enough.

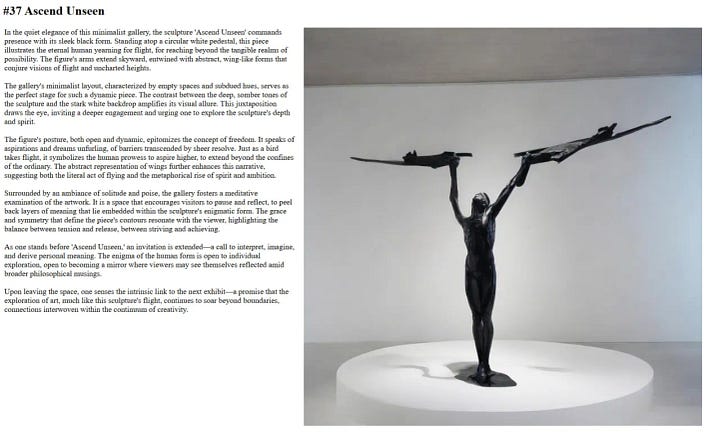

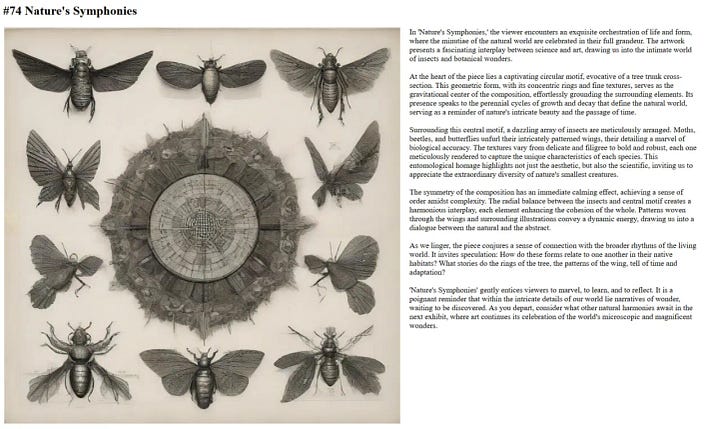

They were also generally more or less about art, sculpture, and architecture, so I decided to convert them into something like a guidebook.

Stage 4: Essay generation

To complete the guidebook, I needed some textual descriptions of my selected images. Indeed, I could just ask some VLM to write these descriptions, but usually, the results of such approaches are weird — such texts are usually full of clichés and general formulations, and when there are many of them, it is instantly obvious that they are very similar in structure.

To address these issues, I used an approach similar to what I did in our recent humor generation project before — I used association generation and a multi-step brainstorming framework. I also generated a short set of hints and recommendations for catalog writers (10 hints).

Finally, I requested OpenAI GPT-4o to generate a short essay about each image, providing it with the description, associations, and a random subset of the writer’s hints.

Stage 5: Final polishing and sharing

After the final cleanup, I finished with 150 images and approximately 52K words of text. To make the layout look better, I searched for some automatic framework for publishers. Eventually, I used WeasyPrint — this easy-to-use python library allows to convert generated HTML to PDF and control page layout details with custom CSS instructions.

Finally, I made a cover — this is the only part I’ve done manually (still using one of the generated images):

The resulting PDF and code are available on my github. I’ve also made a NaNoGenMo 2024 submission to share it with the community; there, I promised to provide more technical details, so that’s the reason I wrote this post.