FREAKING ARCHITECTURE

...an unsupervised pipeline for architectural images generation...

TLDR: We built a pipeline for generating diverse images using neural networks and publish them automatically on Telegram, Mastodon, Bluesky, and Tumblr. Later, we analyzed user reactions to improve prompts. Our findings were presented at HuMaIn@KI-2024. Follow the feeds or learn more on the project page.

A couple of years ago, I decided to investigate ways of possible unsupervised generation of high-quality, but diverse images using neural networks like Stable Diffusion. My goal was to create an automatic end-to-end pipeline that produced as few bad results as possible. I teamed up with an old fellow, s0me0ne, and we began experimenting.

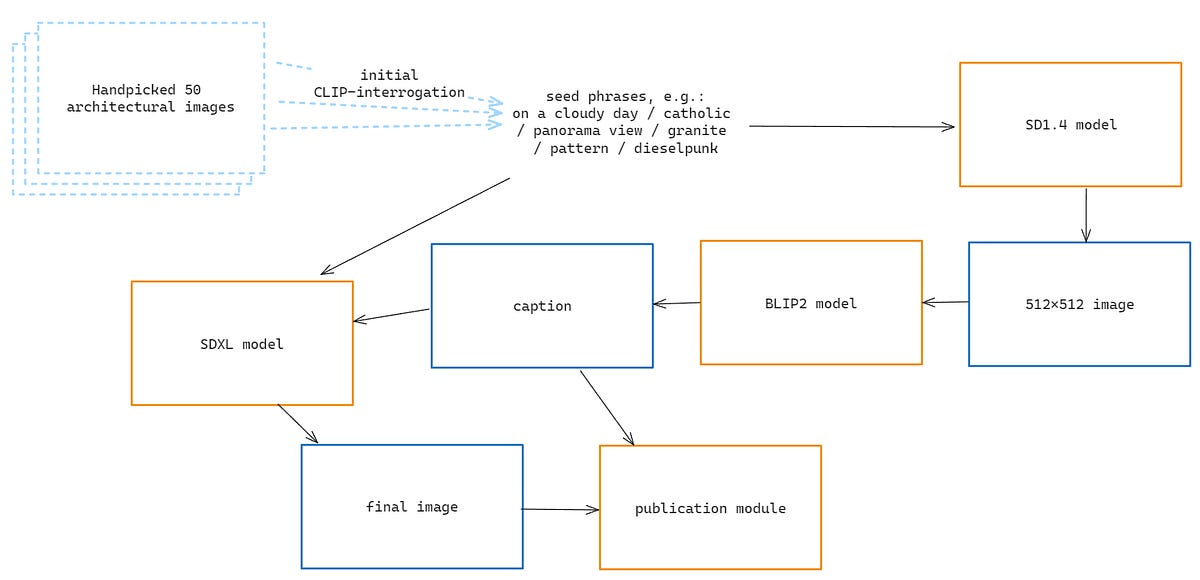

At first, we had a simple setup: random prompt generation based on a “kaleidoscopic” combination of a large list of keyphrases. But as the project developed, things got more complex. Over time, we arrived at a process where that initial prompt was just the starting point. The final image would go through several modality shifts, using three generative networks plus a couple of auxiliary networks to assess quality. Ablation studies showed that every step of the pipeline contributed to improving the results.

Early on, we set up automatic publishing of the images on Telegram to cut off our ability to moderate content. Later, we added feeds on Mastodon, Bluesky, and Tumblr (getting it to post automatically on Twitter and Instagram didn’t work out right away yet).

About a year in, we had another idea. Since the Telegram feed stored a history of user reactions, we could download emoji responses and match them with elements from the original prompts via the images. This allowed us to identify keywords that statistically increased or decreased the chance of getting a reaction (thanks to Vadim Nikulin for helping with the history dumping).

Eventually, we even wrote a research paper, “Machine Apophenia: The Kaleidoscopic Generation of Architectural Images”, speculating on an idea of the Machine Apophenia effect, and presented it this past September at HuMaIn @ KI 2024.

You can subscribe to these feeds via the links above; we randomly publish 3–7 images a day to avoid spamming, and there are already over 4.5K images in the feed. For more technical details, check out the project page.

PS. It’s hard to say if this project is truly finished — every time we thought it was, new ideas emerged. Some, like latent space analysis and auto-generation of NERF spaces, are still open.