123 BYTES PERL MARKOV CHAIN

TLDR: As a part of a funny online competition called Nano-NaNoGenMo we managed to craft the 123 bytes Perl script for a Markov Chain text generation; 139 bytes in total with a shell command (and even shorter if we allow some input file tweaking, see the remark at the very bottom of the post).

The rest of the post gives some clues on the process of optimization — from a clean and shiny 752-bytes rosetta-code implemetation to the end and beyond.

COMPETITION AND RULES

I already wrote something about the annual competition for automatic novel text generation, National Novel Generation Month (also known as NaNoGenMo). During November, participants have to write some computer program which generates a text with at least 50000 words and publish the source code. No other rules apply.

But this year it had a twist — the narratology enthusiast from MIT, Nick Montfort, decided to run a spin-off competition, Nano-NaNoGenMo (aka NNNGM), with only one additional rule — the program should have 256 bytes at most (with the possibility of using any of Project Gutenberg files as input).

I’ve discovered NNNGM just in 3 days before the end but it was so tempting so I decided to join the competition. Next, I thought what is the simplest way to generate plausible texts is to use Markov chains and in my opinion, Perl is one of the best choices for code obfuscation projects (as I mentioned before) so it was going to be a Perl implementation of the Markov chain text generator, despite the fact I didn’t write a line of Perl code last decade.

INITIAL CODE AND LOGIC

First of all, I took the rosettacode’s Perl implementation of the Markov chain text generator as a starting point. Let’s read it:

use strict;

use warnings;

my $file = shift || 'alice_oz.txt';

my $n = shift || 3;

my $max = shift || 200;

sub build_dict {

my ($n, @words) = @_;

my %dict;

for my $i (0 .. $#words - $n) {

my @prefix = @words[$i .. $i+$n-1];

push @{$dict{join ' ', @prefix}}, $words[$i+$n];

}

return %dict;

}

sub pick1 { $_[rand @_] }

my $text = do {

open my $fh, '<', $file;

local $/;

<$fh>;

};

my @words = split ' ', $text;

push @words, @words[0..$n-1];

my %dict = build_dict($n, @words);

my @rotor = @words[0..$n-1];

my @chain = @rotor;

for (1 .. $max) {

my $new = pick1(@{$dict{join ' ', @rotor}});

shift @rotor;

push @rotor, $new;

push @chain, $new;

}

print join(' ', @chain) . "\n";To keep track of the following changes it’s useful to understand the basic logic steps of this code:

let’s assume we have an input file with the text all in one line (without line breaks),

first, we read all the words from input file into list @words,

append the very first $n words to the end of the list (otherwise it can preliminary stop whenever the last $n words occasionally appear as a current key),

build a hash %dict with each $n words in a row as keys and lists of possible next words as values,

starting from the first $n words as a seed, take the next one as a random choice from the list from %dict by last seen $n words as a key, stored in @rotor , accumulate all generated words in @chain and print them at the end.

Note, this version has 752 bytes length.

OPTIMIZATION CHRONICLE

324 bytes:

For simplicity I started with some basic preparation:

got rid of argumets parsing and fixed the input filename to a.txt,

replaced constants with inline values (fixed them to $max=200, $n=3),

removed some unnecessary syntax sugar, spaces and tabs,

renamed functions and vars to 1-char names: pick1() -> z(), build_dict() -> b(), @words -> @w, %dict -> %d, @chain -> @c, $new -> $e, @rotor -> @r.

Let’s see what we had now:

sub b{my($n,@w)=@_;%r;

for $i(0..$#w-$n){@p=@w[$i..$i+$n-1];

push @{$r{join' ',@p}},$w[$i+$n];}

return%r;}

sub z{$_[rand@_]}

$t=do{open$h,'<','a.txt';local$/;<$h>;};

@w=split' ',$t;

push@w,@w[0..2];

%d=b(3,@w);

@r=@w[0..2];

@c=@r;

for(1..200){

$e=z(@{$d{join' ',@r}});

shift@r;

push@r,$e;

push@c,$e}

print join(' ',@c)."\n";225 bytes:

Now, I had to make things a bit uglier:

removed unnecessary\n symbols,

put b()(previously known as build_dict()) inline at the only place where it was called from,

put a file-reading code inline into the split’s second argument,

pulled the last print inside the chain iteration cycle to remove the longjoin construction.

sub z{$_[rand@_]}@w=split' ',do{open$h,'<a.txt';<$h>};push@w,@r=@w[0..2];%d=do{for$i(0..$#w-3){@p=@w[$i..$i+2];push@{$s{join' ',@p}},$w[$i+3];}%s};print"@r ";for(1..200){$e=z(@{$d{join' ',@r}});shift@r;push@r,$e;print$e." ";}As you may notice, here I had 225 bytes so I already met the goal of NNNGM to squeeze it into 256 bytes. But as Hunter Thompson once said — “Anything worth doing, is worth doing right.”

So I decided to continue and asked for help an old friend of mine, s0me0ne (Самуан Ункновн), who is known as a crazy Perl hacker since the previous century. Together we continued exercises.

Since we decided to play with Perl interpreter’s command-line arguments, from this point I’ll give two byte counters as a progress indicator — the size of the Perl code payload as well as the total size of the code including shell command.

199 / 214 bytes:

Here we had three mild changes:

switched to reading from stdin instead of opening the file explicitly,

removed the cycle var $i, since it was unused anyway,

used print"$e " instead of print$e." ";.

perl -e'sub z{$_[rand@_]}@w=split" ",<>;push@w,@r=@w[0..2];%d=do{for(0..$#w-3){@p=@w[$_..$_+2];push@{$s{join" ",@p}},$w[$_+3];}%s};print"@r ";for(1..200){$e=z(@{$d{join" ",@r}});shift@r;push@r,$e;print"$e "}'<a.txt181 / 196 bytes:

Now it was time to put push arguments inplace and get rid of @p var at the cycle where we built %d. The second improvement was the usage of a list-in-quotes-to-string resolution instead of joining slices to build a key for %d (in both places).

perl -e'sub z{$_[rand@_]}@w=split" ",<>;push@w,@r=@w[0..2];%d=do{for(0..$#w-3){push@{$s{"@w[$_..$_+2]"}},$w[$_+3];}%s};print"@r ";for(1..200){$e=z(@{$d{"@r"}});shift@r;push@r,$e;print"$e "}'<a.txt177 / 192 bytes:

Here we stuck for a bit, having no good ideas on how to proceed further. However, we still found the way to get rid of 4 more bytes by removing the $e var (because we don’t need to store the whole chain, so we just keep last 3 words and print them ongoing) and switching the first for into a postfix notation with removing the unnecessary brackets.

perl -e'sub z{$_[rand@_]}@w=split" ",<>;push@w,@r=@w[0..2];%d=do{push@{$s{"@w[$_..$_+2]"}},$w[$_+3]for(0..$#w-3);%s};print"@r ";for(1..200){push@r,z(@{$d{"@r"}});shift@r;print"$r[-1] "}'<a.txt145 / 160 bytes:

At that moment we noticed we can disassemble the do() clause, which gave us a lot of advantage. Moreover, we could print words we just took out of @r, it allowed us to remove the explicit print for 3 prefix words because they will be printed from the main cycle anyway. Also, we decided to use the predefined input separator $/ instead of split.

perl -e'sub z{$_[rand@_]}$/=" ";@w=<>;push@w,@r=@w[0..2];push@{$s{"@w[$_..$_+2]"}},$w[$_+3]for(0..$#w-3);for(1..200){push@r,z(@{$s{"@r"}});print shift@r}'<a.txt136 / 151 bytes:

For a while, we tried to liquidate the z() subroutine explicit declaration somehow and now, finally, we managed to put it in place, where it was called from. Then we recalled the system var $/ which is predefined by default to the space symbol, so we used it instead of the implicit “ “ (quoted space) to spare one more byte.

Also, I realized what by the rules of the competition we need to print 50K+ words, not 200. Assuming our input file is long enough, I used its length in words as a target number of words to generate:

perl -e'$/=$";@w=<>;push@w,@r=@w[0..2];push@{$s{"@w[$_..$_+2]"}},$w[$_+3]for(0..$#w-3);for(@w){push@r,$s{"@r"}->[rand@{$s{"@r"}}];print shift@r}'<a.txt127 / 145 bytes:

Here we decided to set the default separator outside of the Perl code: one can use the interpreter’s argument -0 to set the value of $/ in the octal system (so -040 will be a space).

Plus, we found a couple of microoptimizatons:

it’s possible to omit -> when dereferencing an array in Perl (at least in Perl 5.26+),

it’s possible to replace $#w-3 with @w-4 having the same behavior.

perl -040e'@w=<>;push@w,@r=@w[0..2];push@{$s{"@w[$_..$_+2]"}},$w[$_+3]for(0..@w-3);for(@w){push@r,$s{"@r"}[rand@{$s{"@r"}}];print shift@r}'<a.txtNow we reached an important imaginary border — 127 bytes of payload, less than half of the initial goal. Is it possible to make it any better?

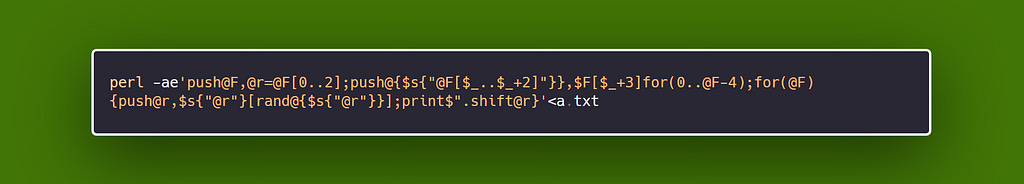

123 / 139 bytes:

There is an another useful Perl interpreter’s parameter, -a , which can be used to automatically split the input and assign it to the @F list. So we can use it to replace our whole input reading code, using @F everywhere instead of @w!

perl -ae'push@F,@r=@F[0..2];push@{$s{"@F[$_..$_+2]"}},$F[$_+3]for(0..@F-4);for(@F){push@r,$s{"@r"}[rand@{$s{"@r"}}];print$".shift@r}'<a.txtAaand… that’s it for now! :)

If you have any good ideas on how to squeeze it more — please write me some comments:)

Additional remark:

If we assume we can tweak the input file a little, we can copy it’s first three words to the end, like

(cat a.txt; cut -d’ ‘ -f1–3 a.txt; ) > b.txt

so now we don’t need the first push anymore, so we could have 116/132 bytes solution:

perl -ae'@r=@F[0..2];push@{$s{"@F[$_..$_+2]"}},$F[$_+3]for(0..@F-4);for(@F){push@r,$s{"@r"}[rand@{$s{"@r"}}];print$".shift@r}'<b.txtBut I do not think it’s fair, so the final result has 123/139 bytes code.

123 BYTES PERL MARKOV CHAIN was originally published in Altsoph’s blog on Medium, where people are continuing the conversation by highlighting and responding to this story.